Published on June 15, 2023

I found the article "Designing AI for All: Addressing Bias in Artificial Intelligence Systems" very interesting because it highlights the issue of bias in AI systems and the importance of designing algorithms that are fair. The article explains how biases can be inadvertently introduced into AI systems, what the consequences of those biases can be, and which steps help mitigate bias and promote fairness. As an aspiring data analyst, I need to understand and address bias in AI systems so that the insights and recommendations I provide are fair and ethical.

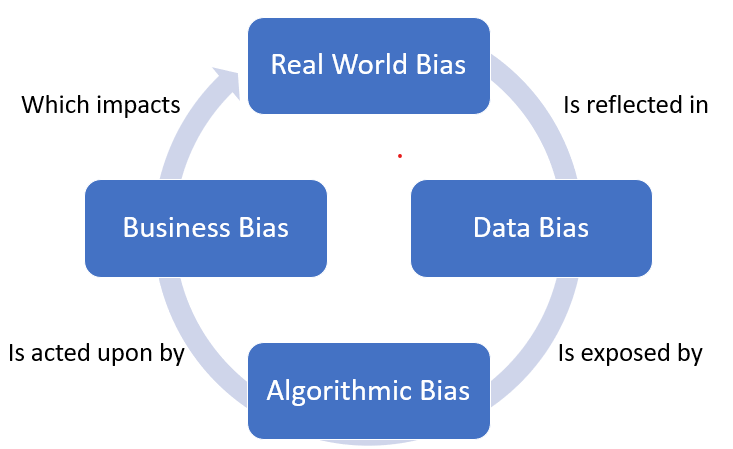

Artificial intelligence (AI) is revolutionizing our lives, from virtual assistants like Siri and Alexa to self-driving cars. However, being widespread does not make AI immune to bias. Bias in AI refers to the systematic skewing of predictions and decisions in favor of or against particular groups. It can stem from the training data, from the algorithms, and from the biases of designers and developers. Addressing bias is crucial to ensure fairness, integrity, and equitable outcomes in AI systems.

The Consequences of Bias in AI:

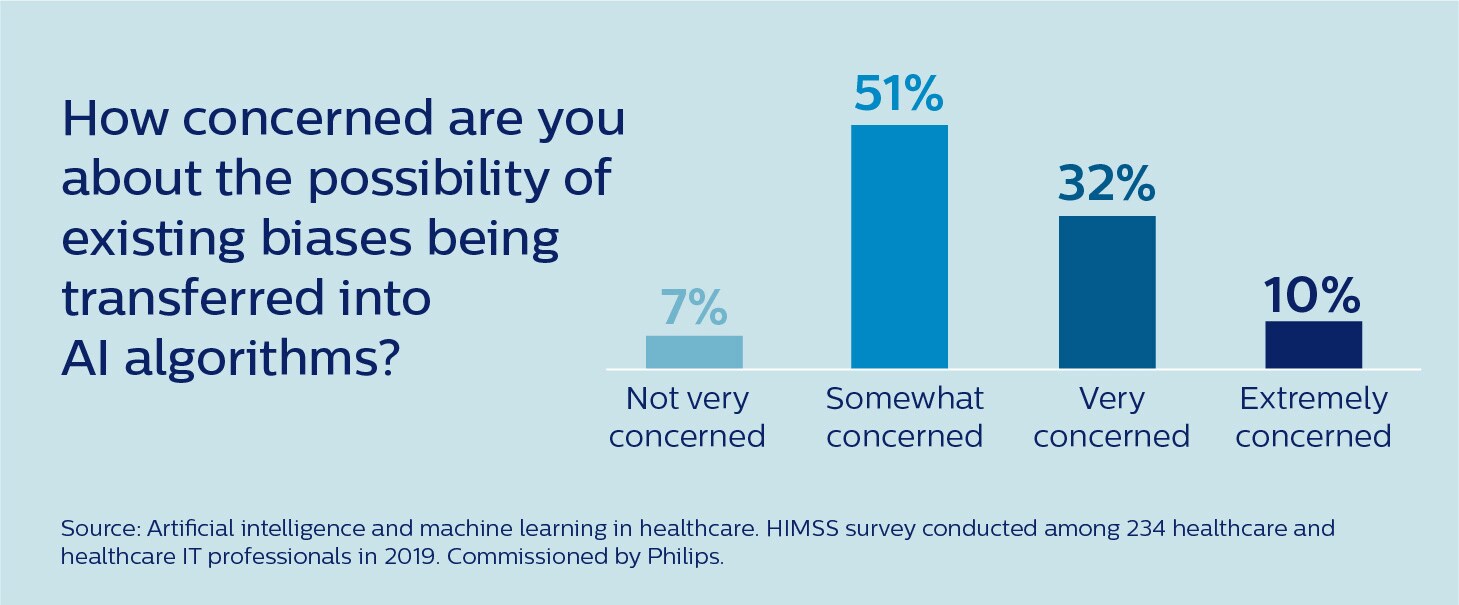

Bias in AI systems can have far-reaching consequences. A biased system can produce flawed or misleading insights and conclusions, which in turn lead to poor decisions that affect individuals and organizations. Biased AI systems can also perpetuate existing societal inequities, exacerbating unfairness and inequality, and trust in AI technology erodes when it contributes to biased outcomes. Addressing bias effectively takes a careful approach that covers data collection, diverse development teams, regular audits, and ongoing training.
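To make the idea of a regular audit more concrete, here is a minimal sketch of one possible check: comparing how often a model gives a positive prediction to each group. The function names, the toy data, and the 0.1 threshold are my own assumptions for illustration and do not come from the article. A real audit would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def audit(groups, predictions, max_gap=0.1):
    """Flag the model if selection rates differ by more than max_gap.

    max_gap is a hypothetical threshold chosen for this example only.
    """
    rates = selection_rates(groups, predictions)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "flagged": gap > max_gap}


# Toy data: group "a" receives positive predictions far more often than "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

report = audit(groups, predictions)
print(report)
```

The gap between the highest and lowest selection rate is often called the demographic parity difference. A large gap does not prove the model is unfair, but it is a signal that the results deserve a closer look.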

Challenges in Addressing Bias:

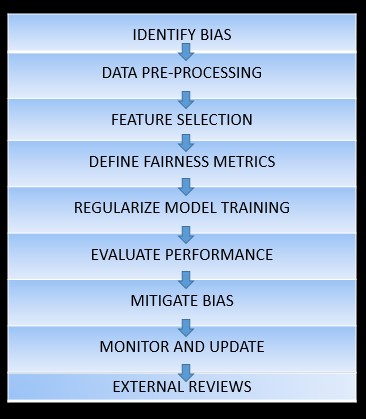

Detecting bias in AI systems can be challenging due to their complexity and opacity. To overcome this challenge, it is essential to have diverse teams working on AI development while prioritizing ethical considerations. Ensuring fairness and integrity in AI design and implementation requires proactive bias mitigation from the early stages of development. Developing algorithms that incorporate fairness considerations can help minimize bias. Techniques such as data preprocessing, algorithm adjustments, and fairness evaluation metrics contribute to bias reduction. Additionally, transparency measures, accessibility of data and algorithms, and clear guidelines and regulations promote fair and responsible AI use.
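As an illustration of the data preprocessing idea, here is a small sketch of reweighing, one well-known preprocessing technique. The article does not say which technique it has in mind, so this is only an example I picked. Each training example gets a weight so that, after weighting, group membership and the label look statistically independent. The function name and the toy data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Compute one weight per example: expected count / observed count.

    For group g and label y the weight is
    (count(g) * count(y)) / (n * count(g, y)),
    so under-represented (group, label) pairs get weights above 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, target_group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == target_group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights)
        if g == target_group and y == 1
    )
    return positive / total


# Toy training data: positives are common in group "a" and rare in group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(weights, rate_a, rate_b)
```

In the raw data, 75% of group "a" has a positive label compared with 25% of group "b". After weighting, both groups have the same weighted positive rate. The weights could then be passed to a training routine that accepts per-example weights, which many machine learning libraries support.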

We would love to hear your thoughts! Please feel free to leave a comment below.